All Star Showdown – The 2016 All Star Vs. The 2015 All Star

- Written by Staff

- Published in Gear

Starboard team riders Zane Schweitzer and Fiona Wylde practicing their sprints on the new 2016 Starboard gear. | Photo: John Carter

Written by Larry Cain

I remember seeing a few internet posts over the winter speculating on whether the 14’ x 25” 2016 Starboard All Star was as fast in flat water as the 2015 model. I’m here to put that speculation to rest. Forget about it. It’s a lot faster.

Developing the Test Protocol

When testing boards, the first thing you need to do is come up with a coherent test protocol. You start by asking yourself, “What are we looking for?” As most SUP races are distance races, ranging anywhere from 5 to 30 miles, you want a test that assesses the board’s speed and efficiency over that distance, not a short, all-out sprint. The trouble is that if you test over that distance, you can’t fairly test two or more boards in one day without the test paddler’s fatigue becoming a factor. You need a way to simulate the pace paddled in races of these lengths without actually paddling the distance. That lets you assess the board’s performance for distance races without test paddler fatigue skewing the results.

So what controls can you establish? Well, the quality of the water you’re testing on is an obvious one. Conditions need to be consistent. The conditions that affect times are wind, current and water temperature, and to a lesser extent air temperature, humidity and barometric pressure. Your tests should be run in calm, sheltered water, and the boards should be tested close enough together to minimize the chance of changes in any of these variables.

At the same time, there needs to be some controls placed on the test paddler to ensure consistency of effort from one test to the next. We can’t easily control power output, but we can control stroke rate and heart rate, both indicators of the test paddler’s effort.

With those controls in place, it is just a question of how long to test and what data to collect. You want to test long enough that any differences between boards are discernible and outside the range of experimental error. You also want to collect every piece of meaningful data that you can reliably measure with the equipment you have available.

Fortunately, I have access to some pretty sophisticated monitoring devices through the Canadian Canoe-Kayak Team. Most national teams in sprint canoe-kayak send their crews out on the water in races carrying a small GPS/accelerometer device that allows meaningful data to be reliably collected for analysis. These devices can also be used in training to assess the quality of training and the degree to which training replicates race conditions. The devices the Canadian Team uses are called “SPIN units” and they collect a lot of interesting data.

The Test Protocol

I settled on a three-minute test, paddled at an aggressive race pace, to test the performance of each board and its suitability for a flat-water distance race. This pace was faster than I’d hold for an entire race, but one I’d commonly use for parts of a race. For any test to offer valid information it needs to be repeatable, giving the same or similar results each time, so I ran the test and collected data three times over a one-week period. I would have preferred to run more trials, but had limited access to the SPIN unit. I waited for reasonably calm conditions and made sure that conditions remained constant from the test of one board to the test of the next. I figured three minutes should be long enough to show one board being faster than another, and if it wasn’t, I would extend the test.

To minimize the effect of warm-up/lack of warm-up and fatigue, I changed the order in which the boards were tested for the first two tests and flipped a coin to see which I’d test first on the third test.
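The ordering rule above (opposite orders for the first two trials, a coin flip for the third) can be written down as a tiny schedule generator. This is a hypothetical helper, not something the author used; which board goes first in trial 1 is an assumption, and the coin flip is simulated with Python’s `random` module.

```python
import random


def trial_orders(boards=("2015 All Star", "2016 All Star"), rng=None):
    """Return the board order for each of three trials (hypothetical helper).

    Trial 1 uses the given order, trial 2 reverses it, and trial 3's order
    is chosen by a simulated coin flip.
    """
    rng = rng if rng is not None else random.Random()
    first = list(boards)
    second = list(reversed(first))
    # Coin flip for trial 3: heads keeps trial 1's order, tails uses trial 2's.
    third = list(first) if rng.random() < 0.5 else list(second)
    return [first, second, third]


if __name__ == "__main__":
    for trial, order in enumerate(trial_orders(rng=random.Random(7)), start=1):
        print(f"Trial {trial}: " + " then ".join(order))
```

Passing a seeded `random.Random` makes a schedule reproducible; omitting it gives a fresh coin flip each time.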

I also decided to run a “max speed test”, which was performed quite differently. I removed any control on heart rate and stroke rate and simply paddled all-out for 30 seconds, allowing myself a three-stroke build-up before collecting data to minimize the effect of a good or bad start.

Starboard team riders Connor Baxter and Zane Schweitzer testing the All Star on Maui. | Photo: John Carter

Data Collected (3-Minute Test)

I was interested in collecting and comparing data that would indicate a difference in performance between the two boards. As it turns out I was also able to collect and compare data that provides interesting insight into how well each board can be paddled, which in turn affects performance. Below is a summary of the data collected and compared:

- Average Velocity: This is pretty straightforward and what we’re ultimately interested in. This is the average velocity for the three-minute piece and is collected in meters/second (m/s). I have converted it to kilometers/hour (km/h) as it is an easier unit for most people to understand.

- Average Pace/Km: This is the average pace for the three-minute piece expressed as minutes/km. This is the measure I normally use on my GPS when I’m paddling and that makes the most sense to me.

- Average Stroke Rate: This represents the average stroke rate or cadence for the three-minute piece. Ideally this should be the same in both tests. In fact there was usually a slight difference in stroke rate from board to board and test to test, despite my best efforts to keep it the same. The differences were small and I normalized them by dividing velocity by stroke rate in each test.

- Average Distance per Stroke: This value represents the average distance the board travels in each stroke during the three-minute test and, with stroke rate controlled, represents a good indicator of each board’s ability to glide and maintain speed between strokes.

- Average % Positive Acceleration per Stroke: This is the average amount of positive acceleration in each stroke for the three-minute test, expressed as a percentage of the total acceleration (positive plus negative, i.e. deceleration). Because the average acceleration curve for each paddler, which we call a “stroke profile”, is like a fingerprint, one would intuitively expect this measure to depend more on the paddler’s technique than on the board and to be fairly consistent from board to board.

- Peak Velocity: Though not as important in the three-minute test as in the max speed test, I thought it would still be interesting to see which board reached the fastest speed in the three-minute test done at constant stroke rate. Again, this was recorded in m/s and I converted it to km/h.

- Peak Pace: This is simply the peak velocity for the three-minute test expressed as time per kilometer.

- Distance: This is the total distance in meters paddled in the three-minute test.

- Average Velocity/Stroke Rate x 100: This is my attempt to normalize average speed for differences in average stroke rate between tests. The greater the value, the better.

- Stroke Effectiveness (SE): This is a key indicator of performance for most national teams in canoe-kayak. When data like distance per stroke, time to peak acceleration, average % positive acceleration and peak acceleration were collected for numerous time controls and races in canoe-kayak and compared against performance, it was somewhat surprising to learn that none of these measures correlated strongly enough with performance to be considered a key indicator. Some of the fastest athletes had a very short time to peak acceleration, others a much longer one. Some had great distance per stroke, others much less. No single measure seemed to correlate directly with average velocity over a given distance.

However, one value that could be derived from the data collected, stroke effectiveness (SE), had a very high correlation with speed and performance and, if the paddler could consistently produce a high SE in training, was a good predictor of performance potential. Stroke effectiveness is calculated by multiplying velocity by distance per stroke. Considered alone, distance per stroke is somewhat irrelevant, because distance per stroke values are generally higher at lower stroke rates, yet nobody wins races at the low stroke rates required to produce high distance per stroke values. If we assume that stroke rate rises in some fashion as velocity rises, we can assess the quality of the stroke producing that velocity by multiplying the distance per stroke by the velocity. This turns out to be a strong indicator of performance. So I was curious: would one board allow someone to paddle with greater stroke effectiveness than the other?
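The derived values in the list above are simple arithmetic on average velocity and stroke rate. The sketch below assumes velocity in m/s and stroke rate in strokes/min, and derives distance per stroke from those averages; the SPIN unit measures distance per stroke directly, so this is only an approximation, and the units used for the ratio and for SE in the article are not stated (m/s is an assumption here). The input numbers are hypothetical, not the author’s data.

```python
def ms_to_kmh(v_ms):
    """Convert meters/second to kilometers/hour."""
    return v_ms * 3.6


def pace_per_km(v_ms):
    """Return pace as (minutes, seconds) per kilometer, rounded to the nearest second."""
    total_s = round(1000.0 / v_ms)
    minutes, seconds = divmod(total_s, 60)
    return minutes, seconds


def distance_per_stroke(v_ms, stroke_rate_spm):
    """Meters travelled per stroke: speed divided by strokes per second."""
    return v_ms / (stroke_rate_spm / 60.0)


def velocity_rate_ratio(v_ms, stroke_rate_spm):
    """Average velocity / stroke rate x 100 (velocity assumed to be in m/s)."""
    return v_ms / stroke_rate_spm * 100.0


def stroke_effectiveness(v_ms, stroke_rate_spm):
    """SE = velocity x distance per stroke."""
    return v_ms * distance_per_stroke(v_ms, stroke_rate_spm)


if __name__ == "__main__":
    v, rate = 3.5, 54  # hypothetical averages, not data from the article
    print(f"{ms_to_kmh(v):.1f} km/h")                    # 12.6 km/h
    print("%d:%02d /km" % pace_per_km(v))                # 4:46 /km
    print(f"DPS {distance_per_stroke(v, rate):.2f} m")   # DPS 3.89 m
    print(f"ratio {velocity_rate_ratio(v, rate):.2f}")   # ratio 6.48
    print(f"SE {stroke_effectiveness(v, rate):.2f}")     # SE 13.61
```

Because SE multiplies velocity by distance per stroke, a board that lets the paddler hold the same distance per stroke at a higher speed scores disproportionately better, which is the quality the author is probing.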

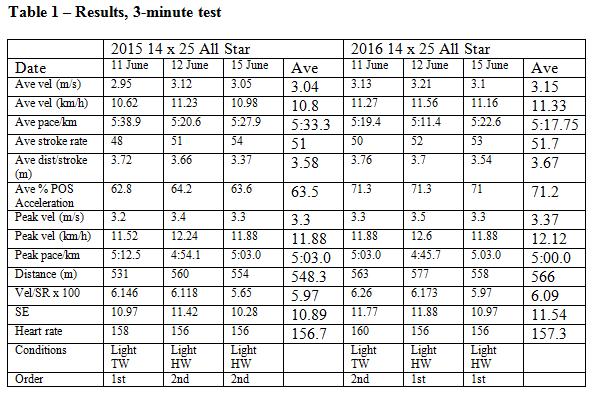

The results are summarized in the table below:

I also decided to run a quick test to see which board seemed to have the greatest capacity for speed in a short sprint. Though less important to those racing distance races, it is still a beneficial quality for a board to have. The ease with which a board accelerates, and the maximum speed it can attain in short bursts, can affect the racer’s ability to catch a bump or a draft train, get into a turn first, get off the start quickly or win a sprint to the finish.

For this test I did 30-second all-out sprints and allowed myself a three-stroke running start. With the board already moving slightly, this is a more relevant test of the board’s ability to accelerate, as it better represents all of the dynamic accelerations required in a race except the start.

Again, I tried to ensure that conditions were constant and that the tests were performed on the same stretch of water. I collected all the same data as in the 3-minute test, but also added the time to peak velocity, which is an indicator of the board’s ability to accelerate. As these were all-out tests, I did not try to control heart rate, so it was not recorded. I also did not control stroke rate, but I did record it, as it is easily retrieved from the SPIN unit.
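A minimal sketch of how a sprint could be summarized once the running start is excluded, assuming the velocity trace is a list of evenly spaced samples in m/s and that the index of the first sample after the build-up is known. The function name and the choice to measure time to peak from the start of data collection are assumptions; the article does not say exactly how the SPIN software does it.

```python
def sprint_summary(velocities_ms, dt_s, start_index):
    """Summarize an all-out sprint from evenly spaced velocity samples (hypothetical helper).

    start_index is the first sample after the running start; earlier samples
    are ignored. Time to peak is measured from that sample.
    """
    window = velocities_ms[start_index:]
    if not window:
        raise ValueError("no samples after the running start")
    avg = sum(window) / len(window)  # time average, since samples are evenly spaced
    peak = max(window)
    time_to_peak_s = window.index(peak) * dt_s  # first occurrence of the peak
    return {
        "avg_kmh": avg * 3.6,
        "peak_kmh": peak * 3.6,
        "time_to_peak_s": time_to_peak_s,
    }


if __name__ == "__main__":
    # Hypothetical 2 Hz trace; samples before index 3 stand in for the running start.
    trace = [1.0, 2.0, 3.0, 3.8, 4.1, 4.3, 4.2, 4.25]
    print(sprint_summary(trace, dt_s=0.5, start_index=3))
```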

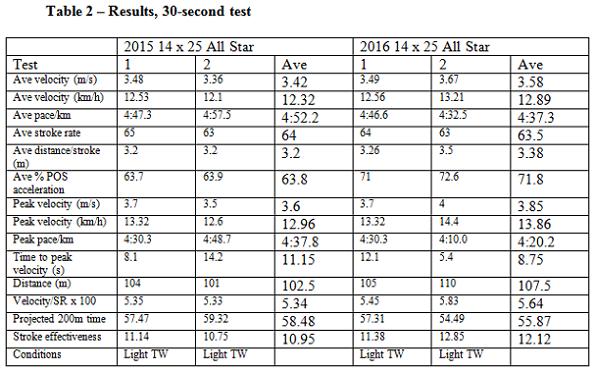

The results for the 30-second test are found in the table below:

Discussion

The 2016 All Star outperformed the 2015 in every measure. This wasn’t surprising. The board feels faster, and some of the data supports the contention that it is more “paddler friendly” when it comes to trying to paddle fast. It’s worth taking a closer look at some of the data, and also at the tests themselves, to explore whether or not they were sufficient to truly assess the differences between the boards.

In each of the 3-minute tests, the 2016 outperformed the 2015 in every single measure. In the first two tests the margins between the two boards were huge: the average velocity of the 2016 was 6% and 3% faster than that of the 2015, respectively. In both tests the stroke rate was slightly higher on the 2016 (by 2 strokes/min in test one and 1 stroke/min in test two). Despite my best efforts, it is difficult to paddle at exactly the same rate, even with feedback from a GPS device. I anticipated this, so to normalize speed for stroke rate I calculated a speed to stroke rate ratio for each test (velocity/stroke rate x 100). Even when velocity is normalized for stroke rate, the 2016 performed substantially better, with the ratio 2% and 1% greater in the first and second tests, respectively.

In the third test I made a real effort to keep the stroke rate down on the 2016 board and actually paddled at a higher stroke rate on the 2015 (53 strokes/min on the 2016 vs. 54 strokes/min on the 2015). Yet the 2016 was still 1.5% faster over the 3-minute test, and when normalized for stroke rate the difference was a 3.5% advantage for the 2016.

Averaged over the three tests, the average velocity was 3.6% greater for the 2016 and, when normalized for stroke rate, the 2016 performed 2% better. Peak speeds reached in each of the tests were higher for the 2016, and the distance per stroke and total distance travelled in each test were greater for the 2016 as well.
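The stroke-rate normalization can be checked by hand. Dividing each board’s velocity by its stroke rate and comparing the two ratios gives (v_new / r_new) / (v_old / r_old) = (v_new / v_old) × (r_old / r_new), so the x100 scaling cancels. A minimal sketch using the rounded figures quoted for the third test gives about 3.4%, close to the 3.5% reported; the small gap is presumably due to rounding of the published speed and stroke rate figures.

```python
def normalized_advantage(speed_ratio, rate_new_spm, rate_old_spm):
    """Relative advantage of the new board once speed is divided by stroke rate.

    speed_ratio is v_new / v_old. Because (v_new / r_new) / (v_old / r_old)
    equals speed_ratio * r_old / r_new, the x100 scaling cancels out.
    """
    return speed_ratio * rate_old_spm / rate_new_spm - 1.0


if __name__ == "__main__":
    # Third 3-minute test: 2016 was 1.5% faster at 53 spm vs. 54 spm on the 2015.
    adv = normalized_advantage(1.015, 53, 54)
    print(f"{adv:.1%}")  # 3.4%
```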

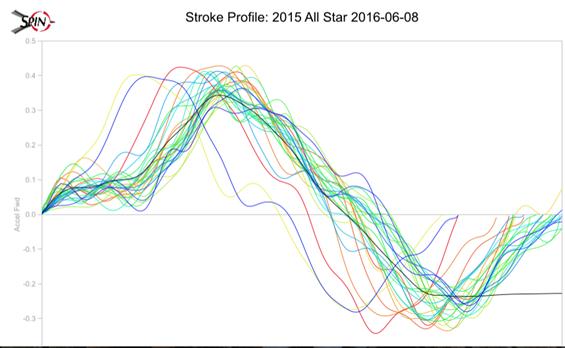

Surprisingly, the average percentage of positive acceleration for each stroke (Ave % POS acceleration) was substantially greater for the 2016. Ave % POS acceleration is a measure of the average percentage of each stroke in which the board is accelerating vs. decelerating. Imagine an acceleration curve for each stroke like the one seen below:

Each colored line represents the acceleration for a different stroke from the sample of strokes collected. The black line represents the average acceleration for the entire sample. Anything above the X-axis is positive or forward acceleration, while anything below is negative acceleration (deceleration). The shape of the curve tends to be like a “fingerprint” for each paddler and describes the way their boat or board responds to the forces they produce during the stroke. If technique is consistent, one would expect the shape of the curve and the relative proportions of acceleration to deceleration to remain nearly constant from board to board, with differences perhaps seen in the magnitude of the acceleration and deceleration. The % POS acceleration is represented by the portion of the curve that lies above the X-axis.
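The article describes % POS acceleration in two slightly different ways: as the share of each stroke spent accelerating, and as positive acceleration expressed as a percentage of total (positive plus negative) acceleration. The sketch below computes both from one stroke’s acceleration samples, assuming a fixed sample rate; how the SPIN software actually calculates the figure is not stated, so treat both functions as hypothetical illustrations.

```python
def pct_positive_time(accel):
    """Percentage of samples (equal to share of stroke time at a fixed sample rate)
    in which the board accelerates forward."""
    return 100.0 * sum(1 for a in accel if a > 0) / len(accel)


def pct_positive_area(accel):
    """Positive area as a percentage of the total absolute area between the
    curve and the X-axis (rectangle rule; the fixed sample interval cancels)."""
    positive = sum(a for a in accel if a > 0)
    total = sum(abs(a) for a in accel)
    return 100.0 * positive / total


def average_over_strokes(strokes, metric):
    """Mean of a per-stroke metric across a sample of strokes."""
    return sum(metric(s) for s in strokes) / len(strokes)


if __name__ == "__main__":
    # One hypothetical stroke sampled at a fixed rate (m/s^2).
    stroke = [-1.0, 0.5, 2.0, 1.5, 0.5, -0.5, -1.0, -1.0]
    print(pct_positive_time(stroke))  # 50.0
    print(pct_positive_area(stroke))  # 56.25
```

The two definitions can disagree, as in this example, so comparisons between boards are only meaningful when the same definition is used throughout.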

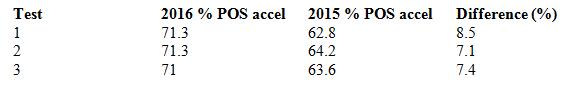

The % POS acceleration values were consistently and dramatically higher on the 2016 All Star, as summarized below:

This is a remarkable difference in the % POS acceleration between the two boards, with the minimum difference being 7.1%!

This got me thinking about the “paddleability” of the two boards. I am extremely familiar with both, having paddled more than 2,000 km on the 2015 since June 1, 2015 and more than 800 km on the 2016 so far this year, so familiarity with and comfort on the boards should not be an issue. I’ve done enough paddling to know that my technique is consistent from test to test and board to board. Yet to me, the 2016 feels faster. It seems to climb out of the water and sit on top of it much more easily. It also seems easier for me to paddle at a higher stroke rate.

I have always been a paddler who prefers to paddle with power rather than stroke rate. To use a cycling analogy, I’d rather ride in a higher gear than a lighter one. I’ve realized that if I’m going to improve and go faster, I need to become more comfortable in a lighter gear and paddle effectively at a higher stroke rate. The 2016 board seems to help me with that. Because it seems to sit so high in the water, the strokes feel lighter, and that lighter load makes it easier for me to sustain a higher stroke rate.

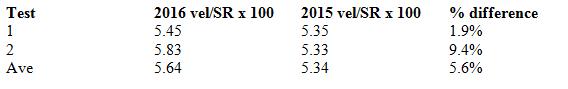

While I can feel that the 2016 is more user friendly for me in terms of stroke rate, I can’t honestly say that I can feel it accelerating for a greater portion of each stroke compared to the 2015. However, the data doesn’t lie, particularly when the differences between the two boards in this metric are so large. For me at least, given the way I paddle, the 2016 board seems to have characteristics that make it easier for me to make it perform.

In the 30-second test, the difference in average velocity between the two boards was negligible in the first test. In the second test it was dramatic. Stroke rates were slightly different, so the best way to compare the two boards is through the velocity to stroke rate ratio. Considering the velocity to stroke rate ratio for each board in the two tests we see:

Again, the 2016 outperforms the 2015 by a substantial margin. All other measures again show the 2016 to be a better performing board, and just like in the 3-minute test we see that the 2016 stays in positive acceleration for a longer period of each stroke by a sizeable margin.

While I believe strongly in the validity of the tests and the technology used to gather the data, it is fair to ask the question whether the sample size is enough to draw firm conclusions in each of the two tests.

For the 3-minute test I believe it is. Though I only had access to the SPIN unit for a few days, because it was being used by the National Canoe-Kayak Team, I believe I could have run the test 100 times with the same outcome. I can feel the difference and, although heart rate values were comparable for the tests on each board, the perceived exertion, in terms of load on the paddling muscles, feels lower on the 2016. As much as I have grown to love the 2015 All Star over more than 2,000 km of paddling in all conditions, I feel even better on the 2016. When testing the 2017 All Star vs. the 2016, I’ll aim to run up to 10 trials on each board, and I hope to have easier access to the SPIN unit, since it will be after the Olympics.

For the 30-second test there is, in my opinion, less certainty in the results. While the 2016 outperformed the 2015 by a substantial margin when stroke rate was accounted for, the sample size is small and I have not done much speed training, which makes the data collected a little less meaningful and more random. Given the current state of my training and the inconsistency of my performance from one effort to the next in short sprints at this point in the year, I think a much larger sample size would be useful for this test. I’ll aim to do at least 10 separate 30-second trials when comparing the 2017 to the 2016.
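One common way to judge whether a board-to-board difference sits outside trial-to-trial noise is to pair the two boards’ results from each session and look at the mean difference relative to its standard error. This is a standard statistical technique, not one the article uses, and the values below are hypothetical, not the author’s data. The resulting t value is usually compared with a Student’s t critical value for n - 1 degrees of freedom; more trials shrink the standard error, which is why the planned 10 trials per test should give firmer conclusions.

```python
import math
import statistics


def paired_summary(new_board, old_board):
    """Mean per-trial difference (new - old), its standard error, and t = mean / SE.

    Each list holds one value per trial (e.g. velocity/stroke rate x 100),
    with trials paired by session so shared conditions largely cancel.
    """
    if len(new_board) != len(old_board) or len(new_board) < 2:
        raise ValueError("need at least two paired trials of equal length")
    diffs = [a - b for a, b in zip(new_board, old_board)]
    mean_diff = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))
    t = mean_diff / se if se > 0 else float("nan")
    return mean_diff, se, t


if __name__ == "__main__":
    # Hypothetical ratios for three sessions; not the article's data.
    mean_diff, se, t = paired_summary([6.6, 6.5, 6.7], [6.4, 6.45, 6.5])
    print(f"mean diff {mean_diff:.3f}, SE {se:.3f}, t {t:.2f}")
```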

Starboard team riders Zane Schweitzer and Fiona Wylde testing the 2016 All Star. | Photo: John Carter

What is particularly impressive to me, and what gives me great confidence in the equipment I’m using, is that I used the exact same test protocol and technology to test a variety of different boards in June 2015. As I was looking for a new board, I wanted to test as many as I could to find the one that would perform best for me. The 2015 All Star was a hands-down winner over the other brands I tested. The only board I tested that outperformed it was the 2015 Starboard Sprint.

Given that the 2016 All Star outperforms, by a considerable margin, the board that beat every other brand I tested last year, I feel really confident that I am riding the fastest 25” board available. And given that its design allows it to really shine in rougher conditions, I think I can say with confidence that I couldn’t be on a better board.

Staff

Website: supconnect.com